Bringing AI-Powered Efficiency to Mortgage Operations

Services provided

Custom AI Platform Development

Product Strategy

LLM Integration

Retrieval-Augmented Generation (RAG) Architecture

Agent-Oriented System Design

Region

USA

The Client

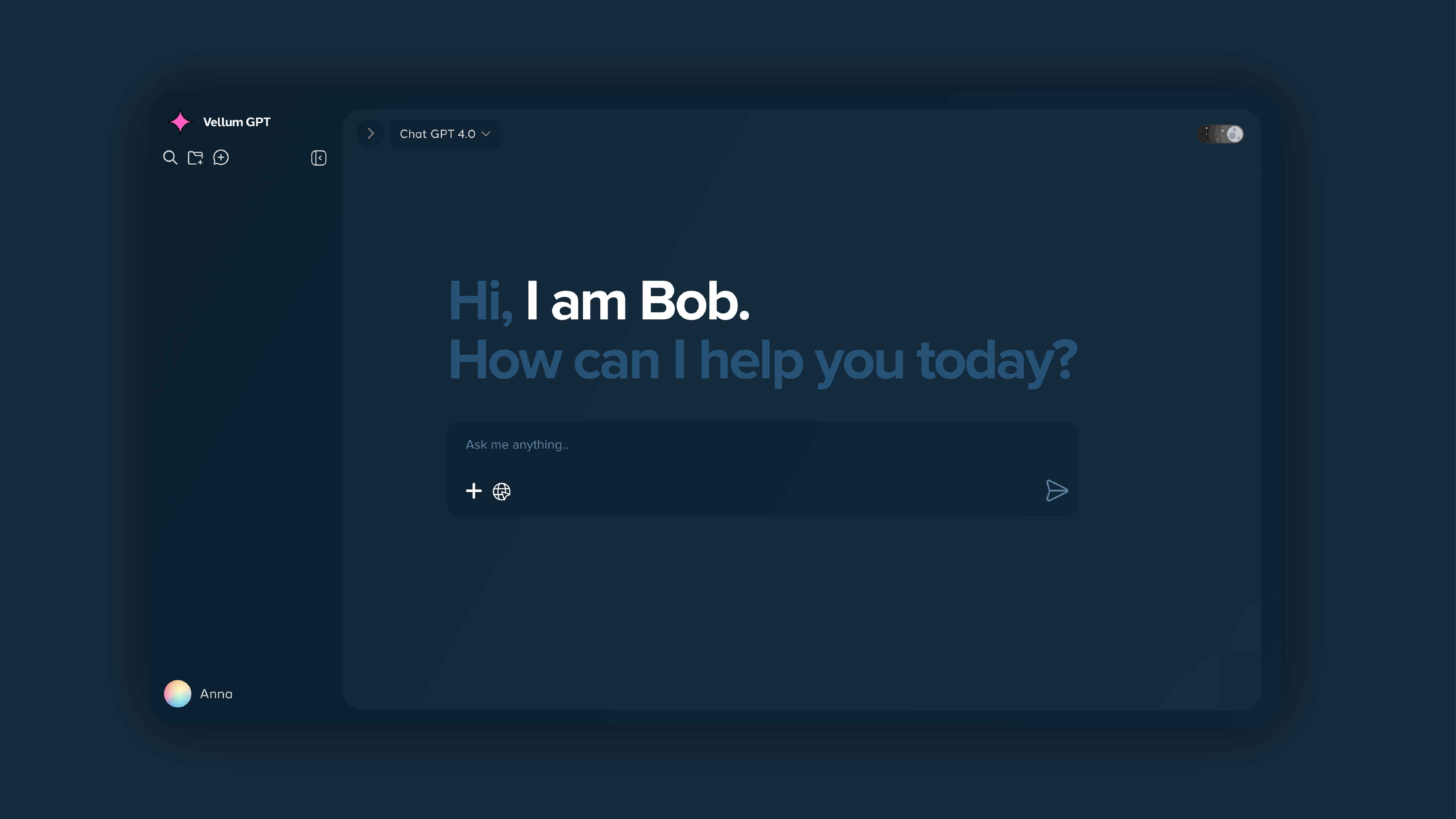

Vellum Mortgage is a U.S.-based mortgage company dedicated to making the home financing process faster, smarter, and more accessible. With a focus on customer experience and operational efficiency, Vellum leverages modern technology to support both borrowers and mortgage professionals.

The company continues to innovate within a highly regulated industry, balancing compliance with cutting-edge digital solutions.

What we did

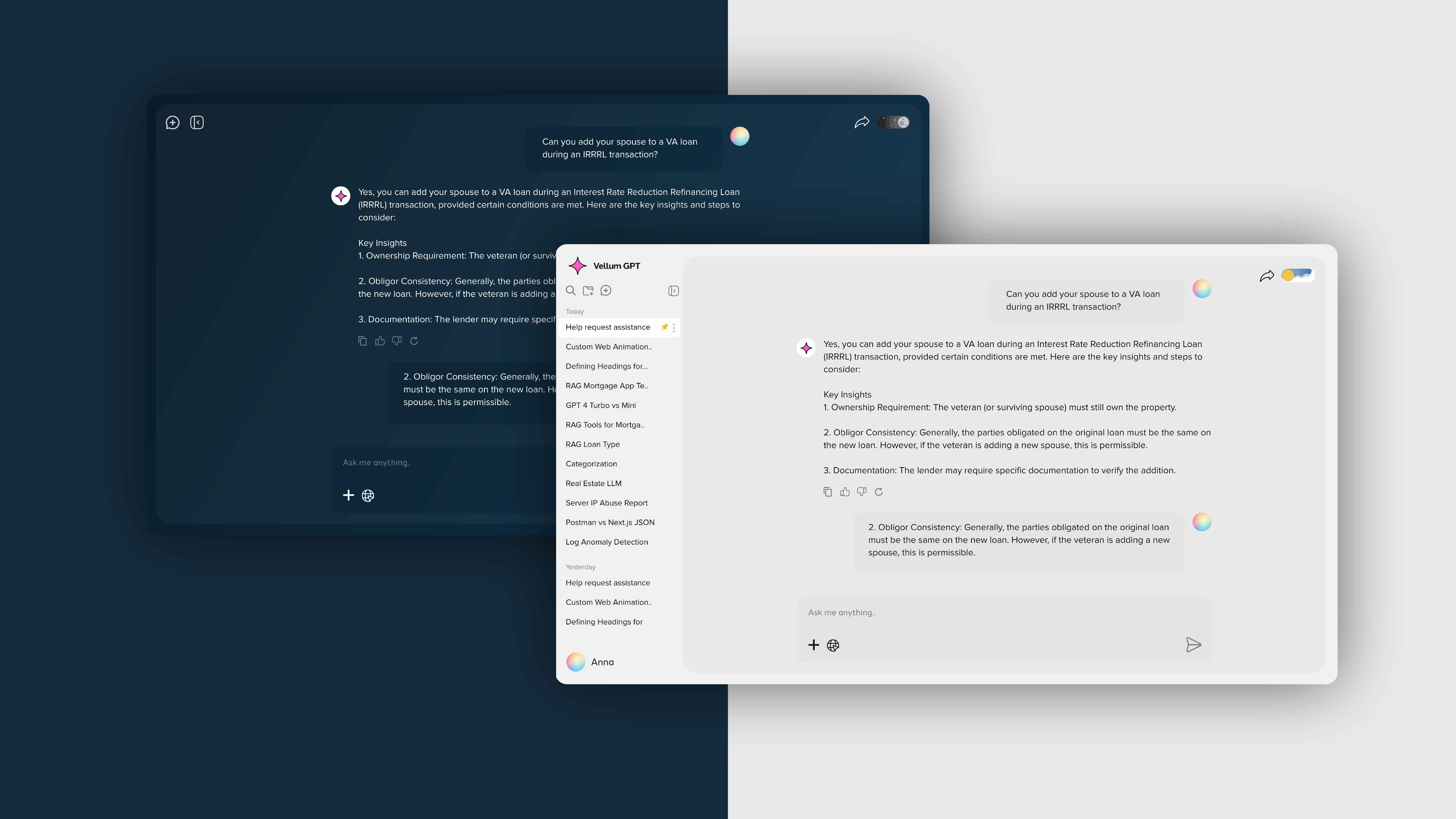

We partnered with Vellum Mortgage to help reimagine how underwriters and loan officers handle complex, policy-heavy questions in a fast-paced, high-stakes industry. Together, we developed a Retrieval-Augmented Generation (RAG) platform, powered by domain-specific AI agents, to reduce bottlenecks in the underwriting process.

Our role involved designing and implementing a scalable, AI-driven system that could instantly surface context-aware, compliant answers from a corpus of over 200 investor, agency, and internal documents.

Brains Behind the Bot: Crafting a Mortgage-Savvy AI

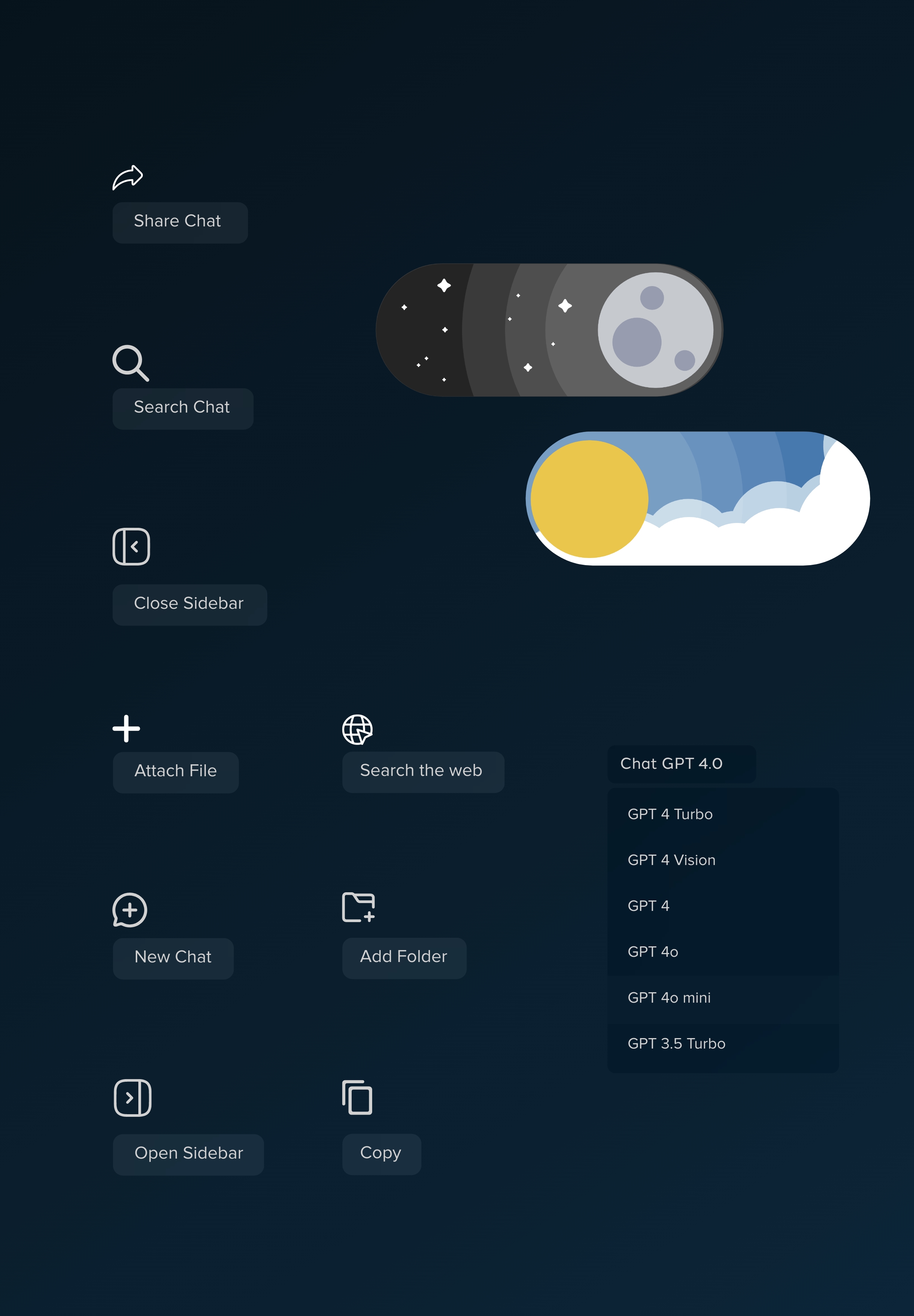

At the heart of the platform, named Vellum GPT, is a multi-agent AI architecture designed specifically to navigate the complexity of the U.S. mortgage industry. Using a Retrieval-Augmented Generation (RAG) approach, we built the system to understand, retrieve, and respond to queries across investor guidelines, agency documents, internal policies, and loan workflows.

Agents were built with clear specialisations. Investor & Agency Agents pull answers from government and investor documentation, Policy Agents surface internal guidelines, and Tooling Agents assist with workflow support and software usage. Powered by LangChain, these agents are orchestrated with memory, contextual awareness, and intelligent routing, making each response highly relevant and traceable.
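To make the routing idea concrete, here is a minimal sketch of how a query might be sent to one of the three agent types. It is illustrative only: the keyword lists, the `route` function, and the `RoutedQuery` type are hypothetical names, and simple keyword matching stands in for the LangChain-based routing used in the real system.

```python
import re
from dataclasses import dataclass

# Hypothetical keyword lists (assumption). A production router would more
# likely use an LLM or embedding classifier than keyword matching.
AGENT_KEYWORDS = {
    "investor_agency": ("fannie", "freddie", "fha", "va", "investor", "agency"),
    "policy": ("policy", "internal", "overlay", "exception"),
    "tooling": ("software", "workflow", "upload", "login", "system"),
}


@dataclass
class RoutedQuery:
    agent: str      # which specialised agent should answer
    query: str      # the original question, unchanged
    matched: tuple  # keywords that triggered the decision, kept for traceability


def route(query: str, default: str = "policy") -> RoutedQuery:
    """Pick the agent whose keyword list overlaps most with the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    best_agent, best_hits = default, ()
    for agent, keywords in AGENT_KEYWORDS.items():
        hits = tuple(k for k in keywords if k in words)
        if len(hits) > len(best_hits):
            best_agent, best_hits = agent, hits
    return RoutedQuery(best_agent, query, best_hits)
```

Keeping the matched keywords alongside the chosen agent is one simple way to make a routing decision explainable after the fact.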

We combined Pinecone for fast, semantic document retrieval with OpenAI and Cohere for accurate, context-rich generative responses. This fusion of vector search and LLMs allows the system to provide instant, compliant, and fully sourced answers, significantly reducing the time underwriters spend on repetitive tasks.
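The retrieve-then-generate flow can be sketched in a few lines. This is a toy illustration under stated assumptions: the three-dimensional vectors, file names, and excerpt texts are placeholders, an in-memory cosine-similarity search stands in for Pinecone, and the prompt is only assembled, not sent to OpenAI or Cohere.

```python
import math
from typing import NamedTuple


class Chunk(NamedTuple):
    source: str    # document the excerpt came from, used for citations
    text: str
    vector: tuple  # toy embedding (assumption); real embeddings have far more dimensions


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vector, chunks, top_k=2):
    """Return the top_k chunks most similar to the query vector."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vector, c.vector), reverse=True)
    return ranked[:top_k]


def build_prompt(question, retrieved):
    """Assemble a prompt that asks the model to cite numbered excerpts."""
    context = "\n".join(
        f"[{i}] ({c.source}) {c.text}" for i, c in enumerate(retrieved, 1)
    )
    return (
        "Answer using only the numbered excerpts and cite them by number.\n"
        f"{context}\n\nQuestion: {question}"
    )


# Placeholder corpus (hypothetical file names and texts).
CHUNKS = [
    Chunk("agency-guide.pdf", "Placeholder excerpt about credit requirements.", (0.9, 0.1, 0.0)),
    Chunk("internal-policy.pdf", "Placeholder excerpt about internal overlays.", (0.1, 0.9, 0.0)),
    Chunk("tool-manual.pdf", "Placeholder excerpt about uploading documents.", (0.0, 0.1, 0.9)),
]
```

Carrying the `source` field through retrieval into the prompt is what lets an answer point back to the documents it drew on.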

Steel Beneath the Surface: Tech Built for Trust, Speed & Scale

To bring this platform to life, we engineered a modular, scalable backend using Python and TypeScript, optimised for real-time performance and data orchestration. The system handles agent logic, document ingestion, chat logging, and automated updates for more than 200 evolving documents.
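One common way to keep a changing document set up to date is to fingerprint each file and re-ingest only what has changed. The sketch below assumes that approach for illustration; `fingerprint` and `plan_updates` are hypothetical helpers, not the platform's actual ingestion code.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Stable content hash used to detect changed documents."""
    return hashlib.sha256(content).hexdigest()


def plan_updates(known: dict, current: dict):
    """Compare stored hashes with the current files.

    known:   document name -> hash recorded at the last ingestion
    current: document name -> raw bytes of the file as it exists now
    Returns (names to ingest or re-ingest, names to remove from the index).
    """
    to_ingest = sorted(
        name for name, content in current.items()
        if known.get(name) != fingerprint(content)
    )
    to_remove = sorted(name for name in known if name not in current)
    return to_ingest, to_remove
```

Only new or modified files are re-embedded under this scheme, which keeps update runs cheap when most of the corpus is unchanged.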

Structured data and user sessions are managed via Postgres, while Redis accelerates high-frequency queries. The entire platform is containerised and deployed via Docker and ECS, with Elastic Load Balancing, VPC, and CloudWatch ensuring consistent performance and visibility across services.
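The role a cache plays in front of a database can be shown with the cache-aside pattern. This is a minimal sketch, not the production setup: an in-memory `TTLCache` class (a hypothetical stand-in) plays the part of Redis, and the `load` callback plays the part of a Postgres query.

```python
import time


class TTLCache:
    """In-memory stand-in for a cache such as Redis, with per-entry expiry."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]  # drop the stale entry
            return None
        return value

    def set(self, key, value):
        self._store[key] = (value, self._clock() + self._ttl)


def cached_lookup(cache, key, load):
    """Cache-aside: try the cache first, fall back to the slower loader.

    Note: a loader result of None is treated as a miss and not cached.
    """
    value = cache.get(key)
    if value is None:
        value = load(key)
        cache.set(key, value)
    return value
```

Repeated lookups for the same key then hit the cache until the entry expires, so the slower store is queried only once per time-to-live window.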

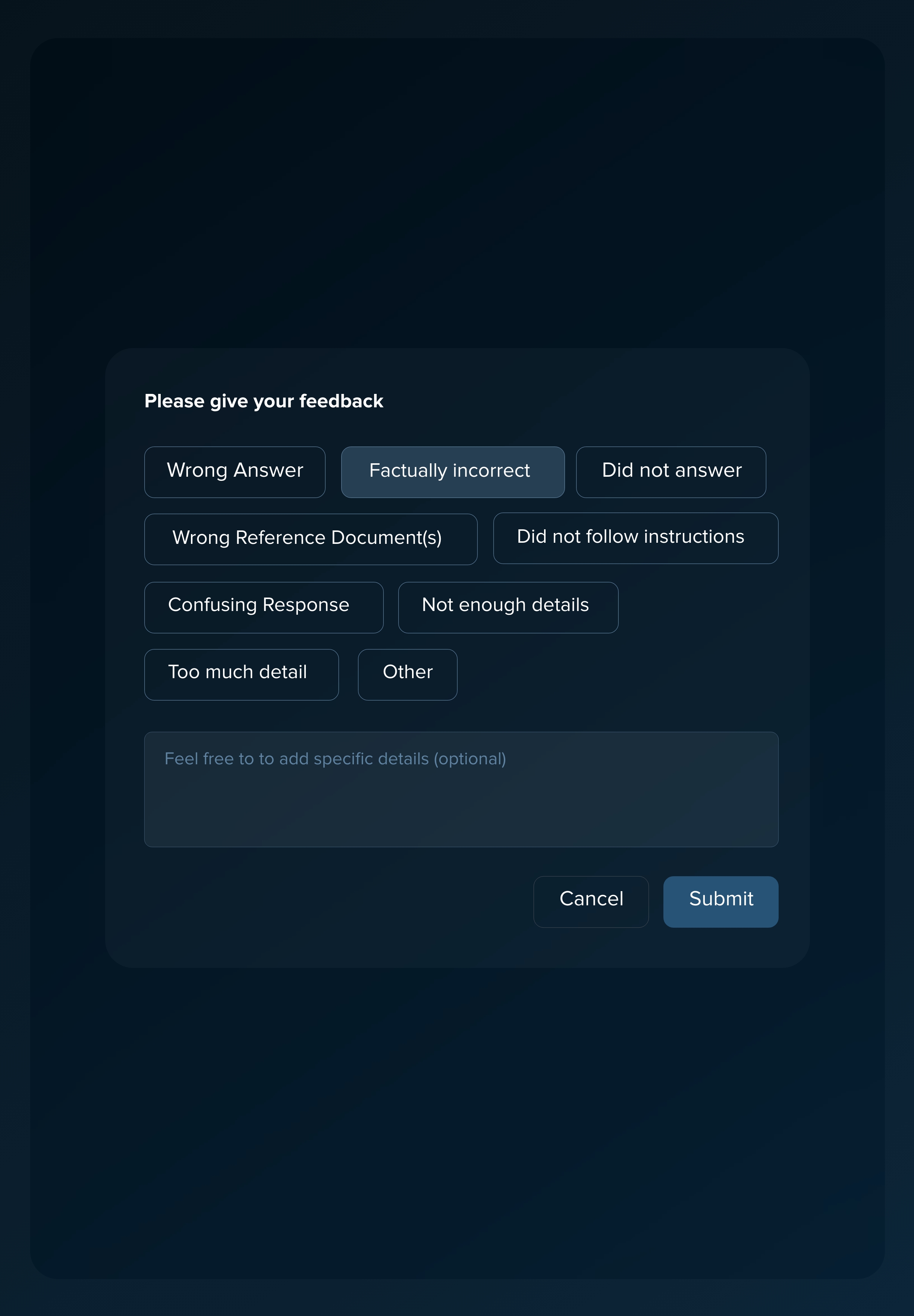

Security and compliance were central from day one. We implemented role-based access control, AWS Cognito for authentication, and encrypted storage using KMS and Secrets Manager. Activity logs and system behaviour are continuously tracked through CloudTrail and Pino logs, while AI moderation and usage tracking ensure every response adheres to industry standards.
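Role-based access control boils down to a mapping from roles to permitted actions, checked before each request is served. The sketch below is illustrative only: the role names, action names, and helper functions are hypothetical, and in the real system the caller's role would come from the authenticated Cognito session.

```python
# Hypothetical roles and actions (assumption), chosen only for illustration.
ROLE_PERMISSIONS = {
    "underwriter": {"ask_question", "view_sources"},
    "loan_officer": {"ask_question"},
    "admin": {"ask_question", "view_sources", "manage_documents"},
}


def is_allowed(role: str, action: str) -> bool:
    """Unknown roles get an empty permission set, so they are denied by default."""
    return action in ROLE_PERMISSIONS.get(role, set())


def authorize(role: str, action: str) -> None:
    """Raise PermissionError unless the role is allowed to perform the action."""
    if not is_allowed(role, action):
        raise PermissionError(f"role {role!r} may not perform {action!r}")
```

Denying by default means a new or misspelled role cannot gain access by accident.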

By combining intelligent AI systems with enterprise-grade architecture, Vellum GPT delivers the speed of automation with the trust and accountability that the mortgage industry demands.

The Ongoing Partnership

We’ve been working closely with Vellum for over three years, contributing to various product initiatives across their digital ecosystem. Our collaboration on Vellum GPT began over a year ago, and since then, we’ve continued to provide technical support, feature enhancements, and system optimisations as the product evolves.

As Vellum pushes the boundaries of what’s possible in mortgage technology, we remain a dedicated partner, helping scale and strengthen their platforms with every iteration.